Thank you for this opportunity to speak here. I was so excited to come down here that I actually forgot my pants. All my clothes are still at home, and I just brought the materials for this talk for three days. (Laughter)

I'm going to talk about science.

Now, why did they invite someone from the Harvard-Smithsonian Center for Astrophysics? You're probably thinking, well, because Harvard has such amazing views about who should teach, who should be scientists.

Or maybe because I'm from the Center for Astrophysics, where we have lots of telescopes in space, and we search for earthlike planets, and maybe we'll find a planet where they do a much better job than we do teaching science, and we can copy them.

No. I think it's probably because we've been doing pre-college science education, K through 12, since 1985.

We have developed lots of curriculum, like Project Star and Project Aries.

We also work with cutting-edge technologies. For example, we have the MicroObservatory telescopes, which I think have now taken over a million pictures. These are five telescopes tied together around the world that take pictures for K through 12 kids every night. We run lots of teacher institutes in Cambridge and also at various sites throughout the U.S.

We develop museum exhibits, such as the Cosmic Questions museum exhibit on the universe.

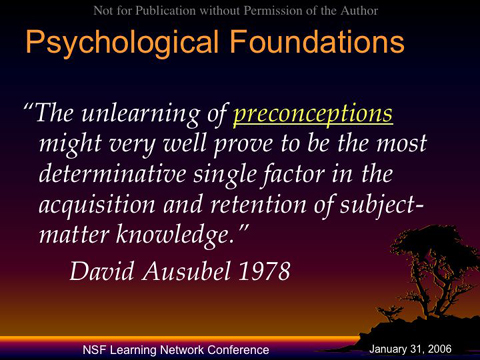

The philosopher here is David Ausubel, who said that "the unlearning of preconceptions might very well prove to be the most determinative single factor in the acquisition and retention of subject matter knowledge."

Now, I'm probably best known for clinical interviews, mainly of children, about their science ideas.

Now, this did not come easily. I was hired at Harvard because I was a middle school math and science teacher, and I can make graphs with ...(inaudible). So I wasn't very good at listening. So my staff got together and got me a listening aid for working with students, which I brought with me. These are just much bigger ears.

I can show you what this has produced. Let me back out of this and show you a piece of one of our videos. By the way, we have a grant to circulate these videos for free. If you want a copy of A Private Universe or the Mind ...(inaudible) videos, you can either give me a business card or send me an email, and I will send you a CD for free. How many have seen A Private Universe? A few. Okay.

[VIDEO]

That's what you get for listening: the students' own ideas. In this case, less than one percent of the mass of the tree comes from what's in the soil. The majority comes from the carbon dioxide in the air. All these students could talk about photosynthesis and write the chemical equation for photosynthesis, but they had never realized that the actual mass of the tree is made out of air.
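The claim that a tree is "made out of air" can be checked against the overall photosynthesis reaction, 6CO₂ + 6H₂O → C₆H₁₂O₆ + 6O₂. In the glucose molecule, the carbon and oxygen atoms come from carbon dioxide (the released O₂ comes from water), and only the hydrogen atoms come from water. The minimal Python sketch below assumes, purely for simplicity, that the tree's dry mass is all glucose, and estimates what share of that mass comes from the air:

```python
# Standard atomic masses in g/mol (rounded).
C, H, O = 12.011, 1.008, 15.999

# Molar mass of glucose, C6H12O6.
glucose = 6 * C + 12 * H + 6 * O

# In photosynthesis, glucose's carbon and oxygen atoms come from CO2 ...
from_co2 = 6 * C + 6 * O
# ... and its hydrogen atoms come from H2O.
from_water = 12 * H

fraction_from_air = from_co2 / glucose
print(f"Share of glucose mass from CO2: {fraction_from_air:.1%}")
```

Real wood is mostly cellulose and lignin plus a small mineral fraction, so this is only a rough check, but it shows why "made out of air" is a fair description: roughly 93% of the carbohydrate mass traces back to carbon dioxide.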

And these kinds of ideas exist prior to instruction. Kids often think them up themselves.

They're embedded in larger structures.

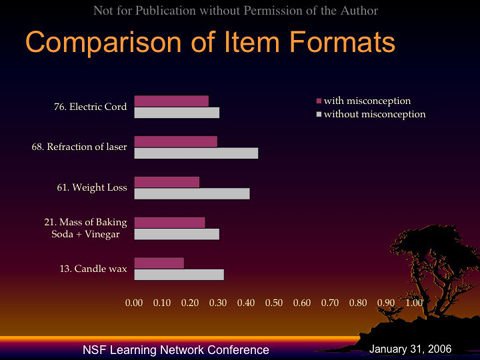

So our criterion for measuring conceptual understanding is not asking people what the right answer is; it's asking them to choose between the common misconceptions and the scientifically correct answer.

All of our test questions include common misconceptions about scientific ideas as answer choices.

Without those misconceptions, we don't feel these questions are valid. Students could just be regurgitating what they might have heard before.

So, let me give you an example here. I have a few examples. This one is from the ...(inaudible) physical science standards, NRC standards, motions and forces. The motion of an object can be described by its position, direction of motion and speed. That motion can be measured and represented on a graph.

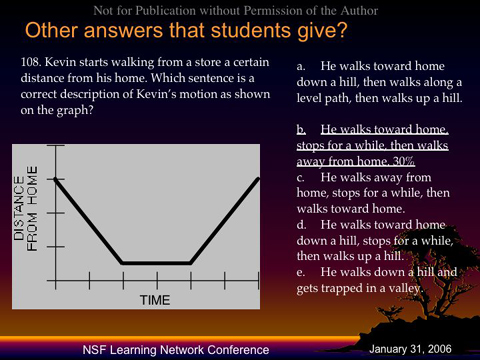

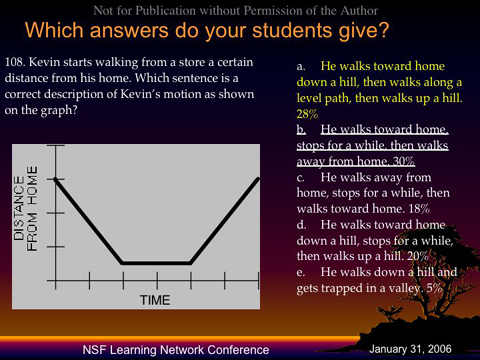

And here is a graph of distance from home versus time. The story is that Kevin starts walking from a store a certain distance from his home. Which sentence is a correct description of Kevin's motion, as shown on the graph? If I let you discuss this, most people would say, well, a legitimate story is that Kevin starts far from home, at the store, and walks toward his home. He's getting closer to home over time. He stops, and then he walks away from home. Maybe he forgot something at the store.
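What makes this item work is that the slope of a distance-from-home graph, not the shape the line happens to resemble, tells the story. A minimal Python sketch, using made-up distance readings rather than the actual graph from the test item, reads a series the way a student who understands the graph would: falling distance means walking toward home, flat means standing still, rising distance means walking away.

```python
# Hypothetical readings of Kevin's distance from home (meters) at equal time steps.
# These numbers are illustrative only; they are not taken from the test item.
distances = [800, 600, 400, 400, 400, 550, 700]

def describe_motion(distances):
    """Turn a distance-from-home series into a story, one phrase per segment.

    The sign of the change between readings (the slope) determines the motion.
    """
    story = []
    for before, after in zip(distances, distances[1:]):
        if after < before:
            step = "walks toward home"
        elif after == before:
            step = "stands still"
        else:
            step = "walks away from home"
        # Merge consecutive identical steps into a single phrase.
        if not story or story[-1] != step:
            story.append(step)
    return story

print(describe_motion(distances))
```

A student who reads the line as a picture of the terrain ("down a hill, along a level path, up a hill") is describing the shape of the curve rather than its slope.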

Now, we could have a question that just had this answer, but we have four other answers. And what I want you to discuss in 40 seconds is, what are some plausible responses from students; and preferably, what do most students say is the story? So you can start now.

That should be enough time for the math teachers. The science teachers would probably take a little longer. Do we have someone who thinks they know what a student might give as an answer? Do we have one possibility here?

He walks down a hill, walks along a flat spot, and then walks up a hill. Now, a student who thought that probably doesn't really know how to read a graph of distance versus time.

So, let's see what happens here. Thirty percent of the students choose the correct answer out of five answers.

And here is A: he walks home down a hill, along a level path, and up a hill.

And there are other answers, like he walks down a hill and gets trapped in a valley. (Laughter). And indeed, the most popular wrong answer is that he walks home down the hill, along a level path, and up a hill.
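Finding the percent correct and the most popular wrong answer for an item is a simple tally of student responses. In the minimal Python sketch below, the response counts are invented and the answer key "C" is an assumption; only the 30% correct rate and the fact that A is the most popular distractor echo the talk.

```python
from collections import Counter

# Hypothetical responses to a five-choice item; "C" is assumed to be the key.
# The counts are invented for illustration and are not the study's data.
responses = ["A"] * 35 + ["B"] * 10 + ["C"] * 30 + ["D"] * 15 + ["E"] * 10
correct = "C"

def item_summary(responses, correct):
    """Return (percent correct, most popular wrong choice) for one item."""
    counts = Counter(responses)
    total = len(responses)
    percent_correct = 100 * counts[correct] / total
    # Among the wrong choices (the misconception distractors), find the most popular.
    wrong = {choice: n for choice, n in counts.items() if choice != correct}
    top_distractor = max(wrong, key=wrong.get)
    return percent_correct, top_distractor

print(item_summary(responses, correct))  # (30.0, 'A')
```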

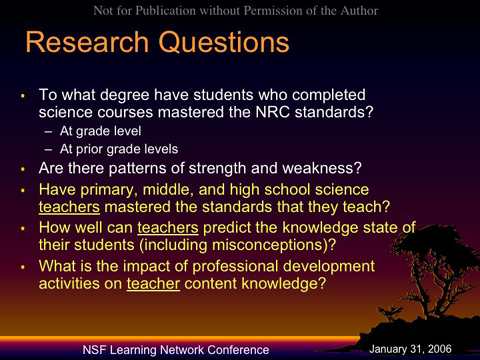

This is the kind of pedagogical content knowledge we mean: being able to predict the mistakes that students make. I picked this example because it involves mathematics as well as science. So what we decided to do with a test like this was to measure student knowledge and to measure teacher knowledge as well.

Have teachers mastered this content? Can they get the right answers? And how well did teachers predict the knowledge state of the students they actually teach? So the kind of work that we do is we test teachers, and then the teachers give these tests in their classrooms, and we compare the results.

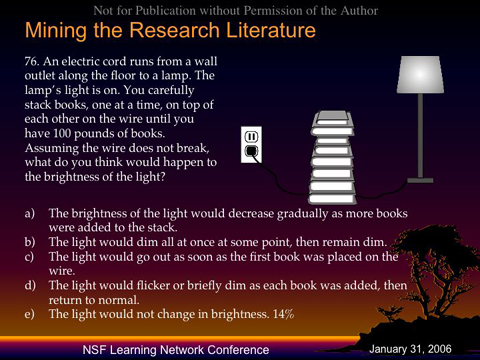

Here's another example. How many of you have stepped on the cord of a lamp? A few people. Have you noticed that the light gets really dark when you step on a lamp cord?

Well, I think most people in this room would say the lamp wouldn't change in brightness if you put a pile of books on the cord. Yet the most popular wrong answer is that the brightness of the light would decrease gradually as more books were added to the stack.

This is in direct contradiction to students' own experiences, and yet they believe that this would happen.

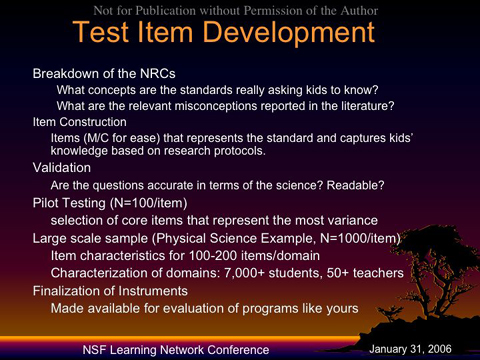

So to develop these tests, we had to break down all of the National Research Council standards in physical science and in earth and space science. We had to read all the literature on misconceptions for each one of those standards, construct multiple items for each standard that deal with all of those misconceptions, and try each item out on over a thousand kids, in order to end up with 100 to 200 items per domain per grade level. So we have a big database of these, and I can show you some of the national data.

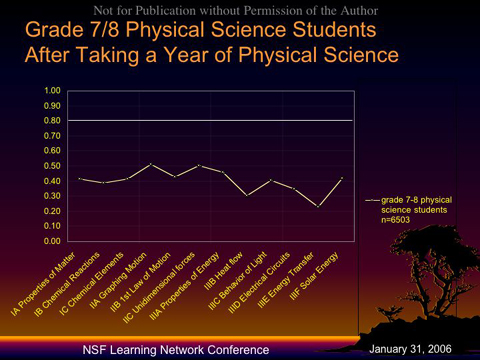

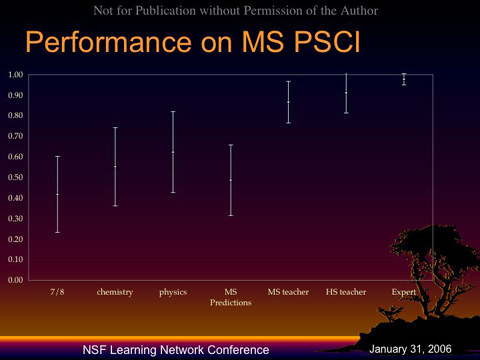

This is for seventh- and eighth-grade physical science students.

After taking a year of physical science, students perform at this level. This line is guessing, 20%. This is 40%. Mastery is usually considered to be 80%. And we could not find a single one of the 12 standards on which the children performed at the 80% level, or even at the 60% level. Now, these are seventh- and eighth-grade students.
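With five answer choices, a student guessing blindly gets about one item in five right, which is where the 20% line comes from. A minimal Python sketch places a standard's percent-correct score relative to that chance level and the 80% mastery threshold mentioned in the talk; the per-standard scores are invented for illustration.

```python
NUM_CHOICES = 5
CHANCE = 100 / NUM_CHOICES  # 20.0 percent: the expected score from pure guessing
MASTERY = 80.0              # the conventional mastery threshold cited in the talk

def classify(score):
    """Place a percent-correct score relative to chance and mastery."""
    if score >= MASTERY:
        return "mastery"
    if score <= CHANCE:
        return "at or below chance"
    return "above chance, below mastery"

# Hypothetical per-standard percent-correct values, invented for illustration.
scores = {"Standard 1": 45.0, "Standard 2": 19.0, "Standard 3": 82.0}
for name, score in scores.items():
    print(name, classify(score))
```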

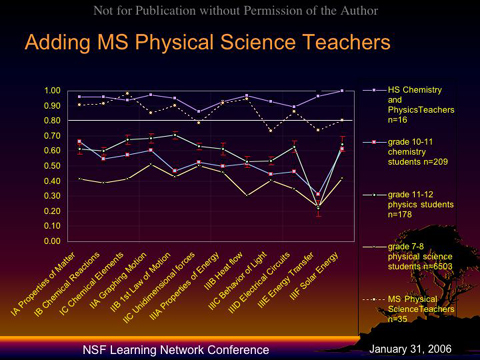

We wanted to see what would happen as they move along, what happens in high school to kids who take chemistry and physics.

Presumably, taking a year of chemistry and then taking a year of physics would improve these kinds of performances, including things like graphing motion, which was the one that you just predicted, and energy-- electrical circuits, which is this one here, the third one in. So let's see what happens by high school.

At the high school level, you can see that in chemistry, the chemical properties of matter standards go up, and in physics, this line here, the physics standards go up. Kids have a lot of trouble with energy transfer in general. So even at the high school level, after taking a year of chemistry and a year of physics, students still have not mastered these middle school standards. Now, maybe it's their teachers. So we decided to check the teachers.

Here are the high school teachers. The high school chemistry and physics teachers are all above the 80% level. You can excuse the chemistry teachers, perhaps, for not knowing too much about unit dimensional forces, but generally they do well. So I have another prediction problem for you. When we tested the teachers of these students in middle school, what did we find? Where do middle school teachers rate on these standards? You'll have 40 seconds to discuss that. I'd like a prediction.

Okay. Do we have someone who works with middle school science teachers here who would like to make a prediction? Does anyone want to make a prediction?

The prediction was that middle school physical science teachers would perform at about the level of the high school physics students. When I gave a talk with this graph at NSF in the fall, they came up with the same prediction. Now, these are, I forget how many, 37 teachers. We'll see when we graph it.

These are the teachers of the kids following the bottom line.

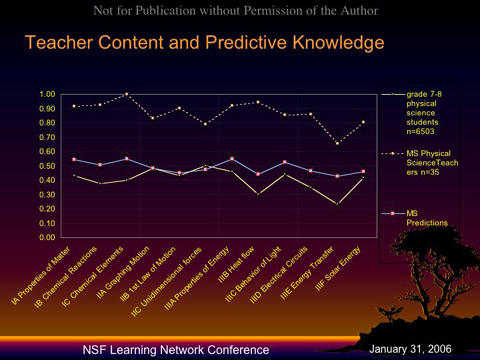

So here are the middle school teachers, slightly below the high school teachers. Remember, these are the concepts that they teach. This isn't the content of junior-year college physics and chemistry. This is the content they teach every day, and perhaps have taught for years. There are a couple of weaknesses: one is in the behavior of light, another is in energy transfer, and perhaps the dimensional forces again, but by and large there is mastery across the domain. And there were, how many do we have down here, 35 teachers in the data set. So this is a surprise.

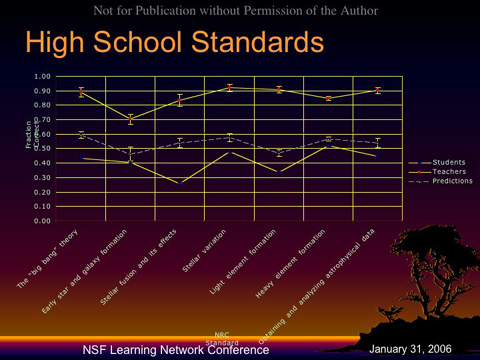

But when we asked them to predict the level of their students' knowledge, we found that just about in all cases, they predicted much better performance of their students than the actual performance that we got in their classrooms. And we decided to look at the comparison between the subject matter knowledge, which looked high, and their predictive capacity, which was good in some cases, but poor in other cases. And also, we did the same thing in astronomy, in space science.

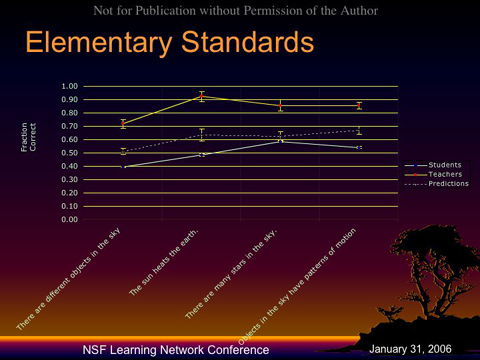

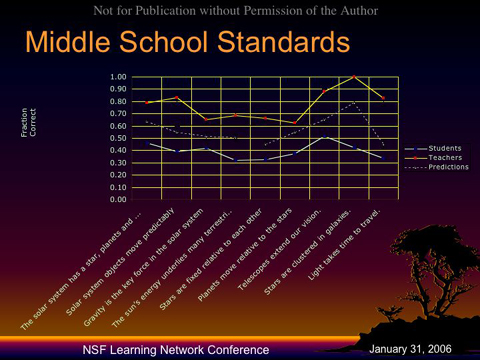

And you can see in astronomy the same profile exists, with more weakness at the middle school level among earth science teachers than either in high school or back here.

Elementary school teachers, by and large, know the astronomy they're supposed to teach. It's at the middle school level that the weaknesses are largest: in understanding gravity and its effects, how the sun's energy underlies terrestrial processes, how stars move compared to planets, and so on.

So, let's look at some patterns in the classroom data. We found these are the ...(inaudible) of one standard deviation.

So we can see that seventh and eighth graders by and large perform lower than chemistry or physics students, but there's a lot of overlap. Middle school teachers predict too high for the students they teach. And by and large, middle school physical science teachers and high school science teachers (and these are the professors, way up here) do quite a bit better and are generally out of the realm of kids at these levels.
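The bars on these plots span one standard deviation, and the "overlap" between groups can be read off those bands. A minimal Python sketch, assuming bands of mean plus or minus one sample standard deviation and using invented scores, computes each band and checks whether two groups' bands overlap:

```python
import statistics

def band(scores):
    """Return (mean - 1 SD, mean, mean + 1 SD) for a group's percent-correct scores."""
    m = statistics.mean(scores)
    sd = statistics.stdev(scores)  # sample standard deviation
    return m - sd, m, m + sd

def bands_overlap(a, b):
    """True if the one-standard-deviation bands of two groups overlap."""
    lo_a, _, hi_a = band(a)
    lo_b, _, hi_b = band(b)
    return lo_a <= hi_b and lo_b <= hi_a

# Hypothetical scores, invented for illustration.
grade_8 = [30, 40, 50]
physics = [45, 55, 65]
print(band(grade_8), band(physics), bands_overlap(grade_8, physics))
```

Overlapping bands mean many individual eighth graders score as well as many physics students, even though the group averages differ.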

What we also found by testing items is that teachers normally make up items which don't include misconceptions.

And if you don't include the misconception, you get much higher performance on items than if you include them. Students are much more easily distracted into picking the wrong answer if it's something they always thought in the past. So, that's why it's so hard to ...(inaudible) these kinds of items.

Now, I want to show you a comparison of some of the data. This is from the astronomy and space science standards.

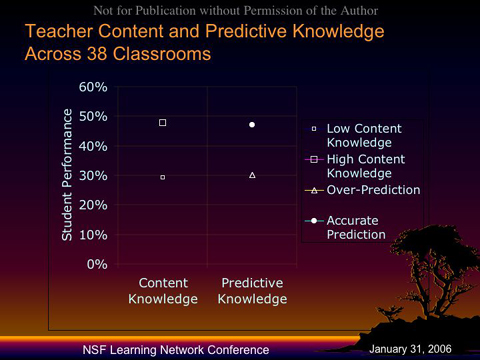

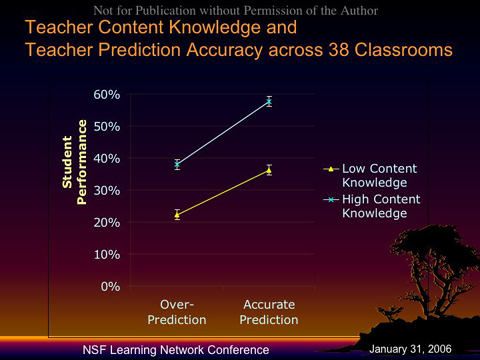

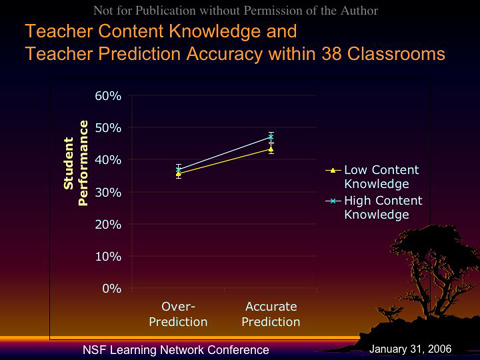

And we found that in classrooms where the teacher's content knowledge was high, student performance was high, and where the teacher's content knowledge was low, student performance was low.

The same was true of predictive capacity; the two effects were about the same size across these classrooms.

But when we looked inside each classroom, at which standards the teachers knew well and which they didn't, and at how well they were able to predict their students' performance on each, we found a different pattern: teachers either accurately predict or over-predict their students' performance. And a teacher's accurate prediction was a much better indicator of student performance than the teacher's content knowledge.
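Sorting a teacher's predictions into "accurate" and "over-predicts" only requires comparing the predicted and actual percent correct for each standard. In the minimal Python sketch below, the 10-point tolerance and the classroom numbers are hypothetical choices for illustration, not the study's criteria or data.

```python
TOLERANCE = 10.0  # hypothetical: within 10 percentage points counts as "accurate"

def prediction_pattern(predicted, actual, tolerance=TOLERANCE):
    """Classify a teacher's predicted percent correct against the class's actual result."""
    error = predicted - actual
    if abs(error) <= tolerance:
        return "accurate"
    return "over-predicts" if error > 0 else "under-predicts"

# Hypothetical classroom: (teacher's prediction, students' actual) percent correct per standard.
classroom = {"Gravity": (75.0, 40.0), "Seasons": (50.0, 45.0)}
for standard, (pred, act) in classroom.items():
    print(standard, prediction_pattern(pred, act))
```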

So this leads me to believe that institutes that primarily try to improve content knowledge, for teachers who think their students know a lot, are only going to get very small gains in students' scores. Even for teachers who can accurately predict their students' ideas, improving content knowledge doesn't make much of a difference. But if you have an institute that teaches teachers how to predict their students' ideas, what those ideas are and how to know whether students have them, you can get much larger gains.

Let me move on to some other patterns. For each standard at each level that we've looked at, we find students have not achieved mastery, and teachers generally overestimate their students' knowledge. Teachers know a lot more than their students know, but this knowledge is not a guarantee of student knowledge. And subjects do much better on items if misconceptions are not among the choices. So teachers' knowledge of student ideas appears to be a more important factor than their own content knowledge.

Now, we have a few pieces of research I can show you. We looked at factors that tend to predict the content knowledge of teachers: what grade level they teach, gender, years teaching, and so on.

And this is the regression model. We found differences by grade band, small differences by gender, effects of years teaching a subject and of whether teachers are certified or not, and an interaction between years teaching and certification.

And this is what the interaction looks like, that teachers who start off certified don't really change in their content knowledge over time.

And teachers who are uncertified, who are teaching in a new field, take about seven years to come up to the level of teachers who are certified. But we were surprised that content knowledge doesn't seem to change for certified teachers.
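An interaction term lets the effect of years teaching differ by certification status. The minimal Python sketch below keeps only the years, certification, and interaction terms (the talk's model also includes grade band and gender), and its coefficients are hypothetical, chosen only so the picture matches the description: flat for certified teachers, with uncertified teachers catching up after about seven years. A purely linear model like this keeps rising past the crossover, which is one of its simplifications.

```python
# Hypothetical coefficients, chosen only to mimic the pattern described in the talk.
# These are NOT the fitted values from the study.
COEF = {"intercept": 60.0, "years": 2.0, "certified": 14.0, "years_x_certified": -2.0}

def predicted_score(years, certified, coef=COEF):
    """Linear model with an interaction: the slope on years depends on certification."""
    c = 1.0 if certified else 0.0
    return (coef["intercept"]
            + coef["years"] * years
            + coef["certified"] * c
            + coef["years_x_certified"] * years * c)

def crossover_years(coef=COEF):
    """Years at which the uncertified line meets the certified line."""
    # The lines are equal where certified + years_x_certified * years == 0.
    return -coef["certified"] / coef["years_x_certified"]

print(predicted_score(0, True), predicted_score(10, True), crossover_years())
```

With these coefficients the certified slope is 2.0 + (-2.0) = 0, which is the "doesn't change over time" pattern, while the uncertified slope stays at 2.0 points per year.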

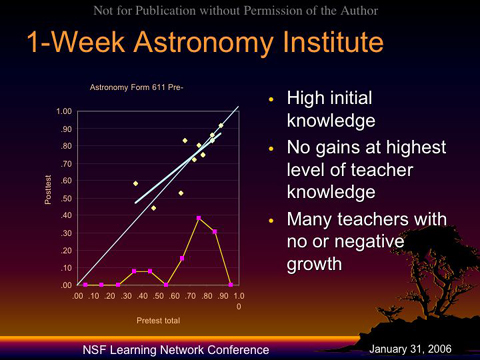

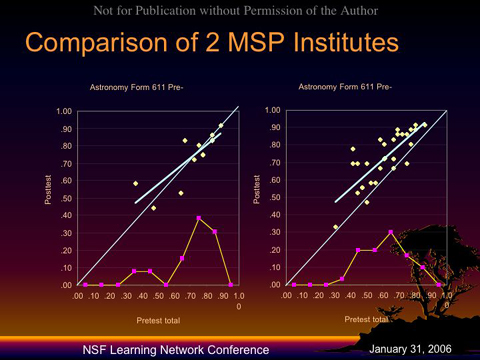

Last summer we also assessed two astronomy institutes, both MSP institutes.

This first one was a two-week institute that had teachers go through the activities that students would normally go through in an astronomy course.

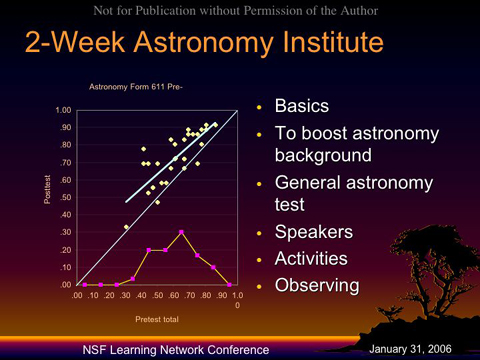

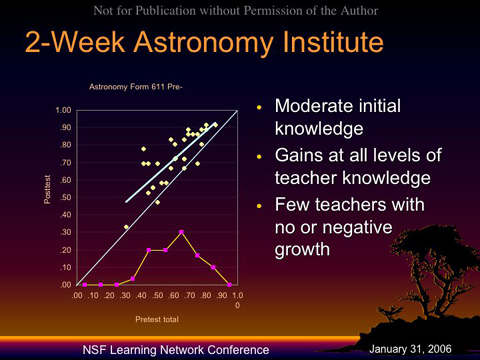

And the idea was to teach the basics and to boost the astronomy background of these teachers. They did some naked eye observing. This is the distribution, the initial distribution of scores. This is the pre-test score versus the post-test score.

Anyone above this diagonal line learned something in the institute, or at least scored higher on the way out than on the way in.
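On a pre-test versus post-test scatter plot, the diagonal is the line post = pre, so a point lies above it exactly when the gain (post minus pre) is positive. A minimal Python sketch, using invented scores for three hypothetical participants, computes each gain and whether the point sits above the diagonal:

```python
def gains(pairs):
    """For (pre, post) score pairs, return (gain, lies above the post = pre diagonal)."""
    return [(post - pre, post > pre) for pre, post in pairs]

# Hypothetical institute data (percent correct), invented for illustration.
pairs = [(40, 65), (70, 68), (55, 55)]
results = gains(pairs)
share_gained = sum(above for _, above in results) / len(results)
print(results, share_gained)
```

A participant exactly on the diagonal shows no change, and one below it lost ground, as many teachers in the second institute did.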

We compare this to another institute where the concentration was on doing what scientists do, learning to use astronomical instrumentation, having many more talks by scientists, doing observing, but generally with instruments.

And what we found was that there were many teachers who lost ground, and that the gain was really only apparent for a few teachers down at the bottom level.

This is just a comparison of two institutes. I show it not because it's conclusive, but because this is the kind of assessment that we can do in partnership with MSP institutes. If you run science institutes, we may be able to help assess the progress of your institute. We have standardized tests, and we can build custom tests to help you assess or evaluate the success of your institute.

So, the patterns that we see: Some teachers show content weakness at all grade levels. Content knowledge changes very slowly over time. Professional development can make a difference. We also found that just because you know all the high school standards doesn't mean you know all the elementary school standards.

Our future efforts, in terms of professional development, are to assess as many programs as we possibly can, and also to look at the effects of different curricula in the classroom using these new instruments.

Conclusions, I would say-- let's see if there is anything else here.

Conclusions. What do we now know? We now know that misconceptions often go unchanged in students' minds after taking science. They appear to be a necessary step in learning, but the standards, as they exist, are very hard to master. We find that teachers are knowledgeable; they have more content knowledge than a lot of people give them credit for. Their content knowledge is at the level at which they teach, so teachers are usually very good at the content that they teach.

Teachers generally do not know their students' misconceptions, but they should. Teacher knowledge builds very slowly over time. And we think that professional development must be targeted at specific standards at particular grade levels, and evaluated with relevant tools. We also have a very large project that involves 18,000 college students at 63 colleges and universities. One of its findings, which I was going to talk about if Deborah's plane didn't come in, is about AP courses.

How many of you have worked on AP at the high school level? We have some. The upshot of this very large study (the results will be released at the AAAS conference in February) is that, in general, AP courses do not prepare students well enough to get advanced placement in college. On the 1 through 5 scale for the AP tests, the only students who should place out of introductory science courses are those who score a 6. (Laughter) Now, it may sound funny, but since the AP scores are pegged to the AP tests, a score of 5 on the AP test is really only half the problems right.

So there is a lot of room for that 6 to take place. As a matter of fact, students who score a 3 on the AP test do no better than students who don't take the AP test at all. And a score of 2 on the AP test is essentially what kids in honors science get in high school without taking AP. So for the College Board, this is a truth-in-advertising issue. The AP courses that are taught in the United States are not strong enough to prepare kids to skip college science.

Well, you can talk about that with me later.

I want to acknowledge my co-investigators, my project manager, the various people who worked on this project, and all the advice we get from good places. You can contact me if you want that free copy of A Private Universe or want to talk about any of this research. And I'll leave you with a quote from Leo Tolstoy that I find really helpful. Thank you.